Introduction

We recently encountered issues with our PHP applications at scale in our Kubernetes clusters on AWS. This post explains the root cause of these issues, how we fixed them with an Egress Controller, and the overall improvements we observed. It also includes a detailed configuration for using HAProxy as an Egress Controller.

Context

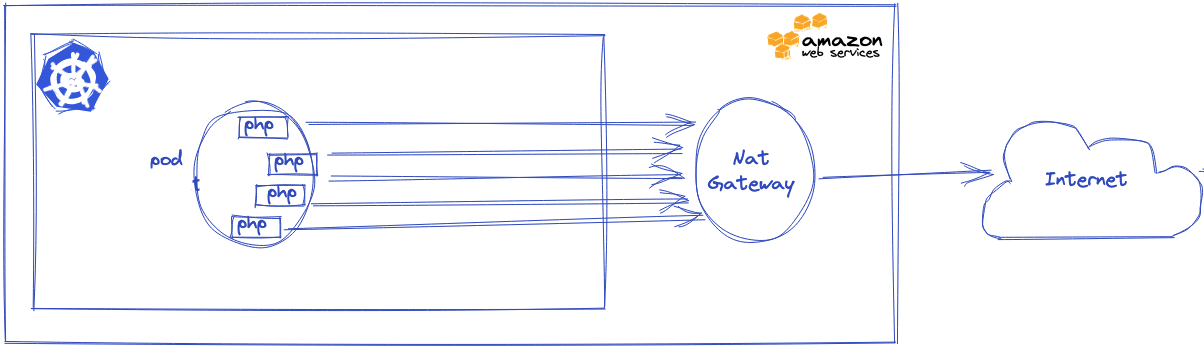

Bedrock uses PHP for almost all of the backend APIs of our streaming platforms (6Play, RTLMost, Salto, …). Our applications are deployed on AWS, in our kops-managed Kubernetes clusters. Each application sits behind a CDN (CloudFront, Fastly) for caching purposes. This means that every time an application needs to call another API, the request leaves our VPC and goes over the internet to reach that API through its CDN.

During special events with huge loads on our platforms, we started to see TCP connection errors from our applications to the outside of our VPC.

ErrorPortAllocation source

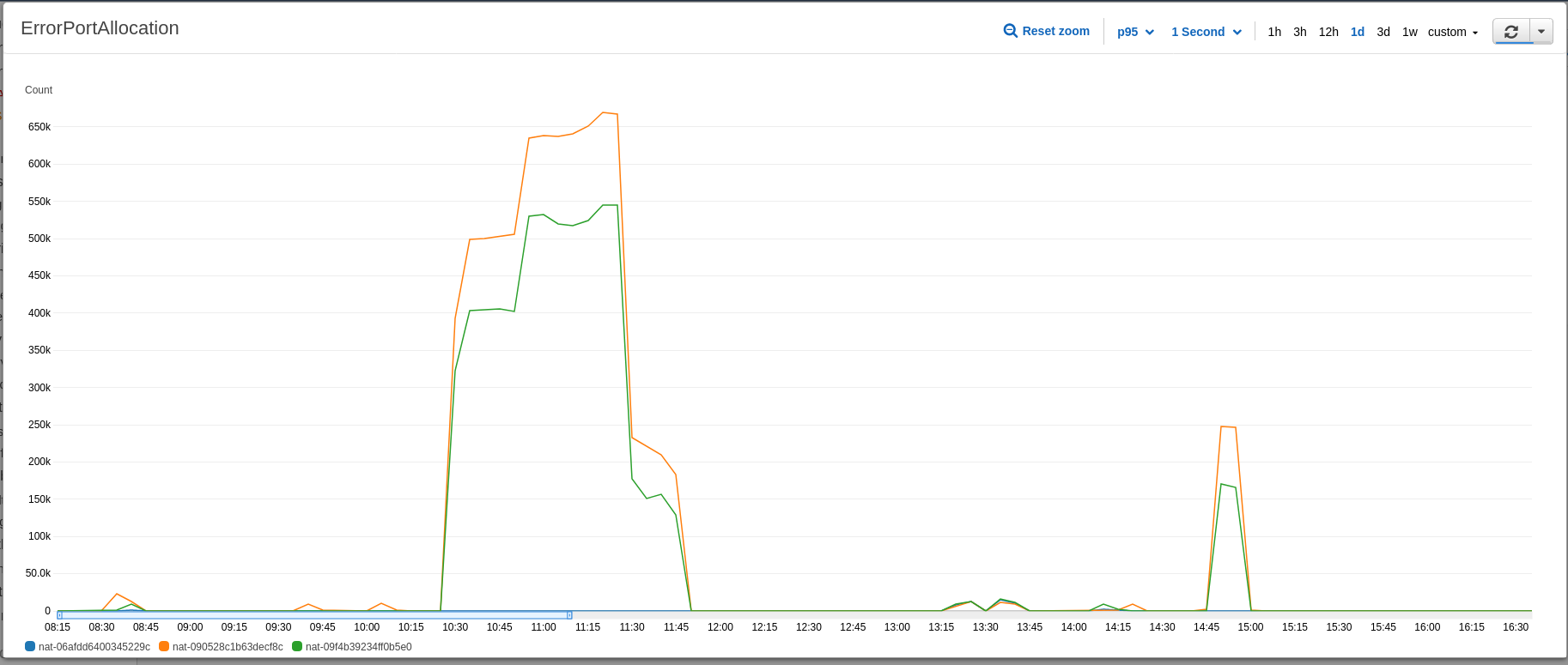

After some investigation, we saw that the TCP connection errors were correlated with the NAT Gateways’ ErrorPortAllocation metric.

In AWS, NAT Gateways are endpoints allowing us to go outside our VPC. They have hard limits that can’t be modified:

“A NAT gateway can support up to 55,000 simultaneous connections […]. If the destination IP address, the destination port, or the protocol (TCP/UDP/ICMP) changes, you can create an additional 55,000 connections. For more than 55,000 connections, there is an increased chance of connection errors due to port allocation errors.” (AWS Documentation)

Our applications always request the same endpoints: the CDNs of other APIs. The destination IP, port, and protocol rarely change, so we started hitting the maximum number of connections, resulting in ErrorPortAllocation.
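To make the limit more concrete, here is a rough back-of-the-envelope sketch in Python. The 55,000 figure comes from the AWS quote above; the request rate and the time a connection stays allocated are purely hypothetical numbers chosen for illustration, and the function names are ours.

```python
# Limit quoted from the AWS documentation: 55,000 simultaneous connections
# per unique (destination IP, destination port, protocol) combination.
NAT_CONNECTIONS_PER_DESTINATION = 55_000


def nat_capacity(unique_destinations: int) -> int:
    """Upper bound on simultaneous connections through one NAT gateway."""
    return NAT_CONNECTIONS_PER_DESTINATION * unique_destinations


def concurrent_connections(new_connections_per_second: float, seconds_held: float) -> float:
    """Little's law: connections in flight = arrival rate x time each one is held."""
    return new_connections_per_second * seconds_held


# Hypothetical load: 20,000 new connections per second, each held for 3 seconds,
# all going to a single destination.
in_flight = concurrent_connections(20_000, 3.0)
print(in_flight)                    # 60000.0
print(in_flight > nat_capacity(1))  # True: port allocation errors become likely
```

Under these assumed numbers, a single destination is already over the limit. Lowering the number of new connections per second is what brings the figure back down.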

Around the same time, we came across a very interesting and timely blog post from Wikimedia: Impact of using HTTP connection pooling for PHP applications at scale.

As Wikimedia’s post explains, PHP applications aren’t able to reuse TCP connections, because PHP processes do not share information from one request to another. Creating new connections to the same endpoints over and over is inefficient: it adds latency, wastes CPU (TLS negotiation and TCP connection lifecycle), and consumes far more TCP connections than necessary.
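The difference between opening one connection per request and reusing a connection can be observed with a small local experiment. The sketch below is not PHP and not our production setup: it is a minimal Python illustration, using only the standard library, that starts a throwaway HTTP server on localhost and counts how many TCP connections it accepts in each mode. All class and function names are ours.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class CountingServer(ThreadingHTTPServer):
    """Local HTTP server that counts the TCP connections it accepts."""
    daemon_threads = True

    def __init__(self, *args, **kwargs):
        super().__init__(*args, **kwargs)
        self.accepted = 0

    def get_request(self):
        # Called once for every accepted TCP connection.
        conn = super().get_request()
        self.accepted += 1
        return conn


class Handler(BaseHTTPRequestHandler):
    protocol_version = "HTTP/1.1"  # allows keep-alive connections

    def do_GET(self):
        body = b"ok"
        self.send_response(200)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the output quiet


def count_connections(requests: int, reuse: bool) -> int:
    """Send `requests` GET requests and return how many TCP connections were opened."""
    server = CountingServer(("127.0.0.1", 0), Handler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    host, port = server.server_address
    try:
        if reuse:
            # One persistent connection carries every request.
            conn = http.client.HTTPConnection(host, port)
            for _ in range(requests):
                conn.request("GET", "/")
                conn.getresponse().read()
            conn.close()
        else:
            # A fresh connection per request, like a PHP process without pooling.
            for _ in range(requests):
                conn = http.client.HTTPConnection(host, port)
                conn.request("GET", "/", headers={"Connection": "close"})
                conn.getresponse().read()
                conn.close()
        return server.accepted
    finally:
        server.shutdown()
        server.server_close()


print(count_connections(5, reuse=False))  # 5
print(count_connections(5, reuse=True))   # 1
```

With TLS, every one of those extra connections would also pay for a full handshake, which is where the wasted CPU and latency come from.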

Outgoing requests optimization

Egress Controller

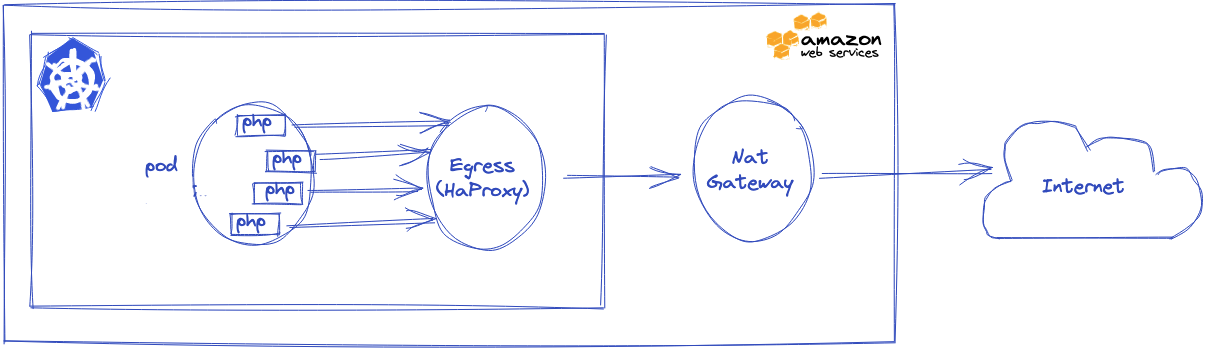

HAProxy is fast and reliable. We use it often and know it well: it already runs as the Ingress Controller in our clusters. We also know that a service mesh needs time to become production-ready and thought it might be overkill in our case, so we tried adding HAProxy as a Kubernetes Egress Controller in our clusters.

We configured some applications to send a few of their outgoing requests to the Egress Controller, which was configured to reuse TCP connections and forward requests to the desired endpoints.

Effects

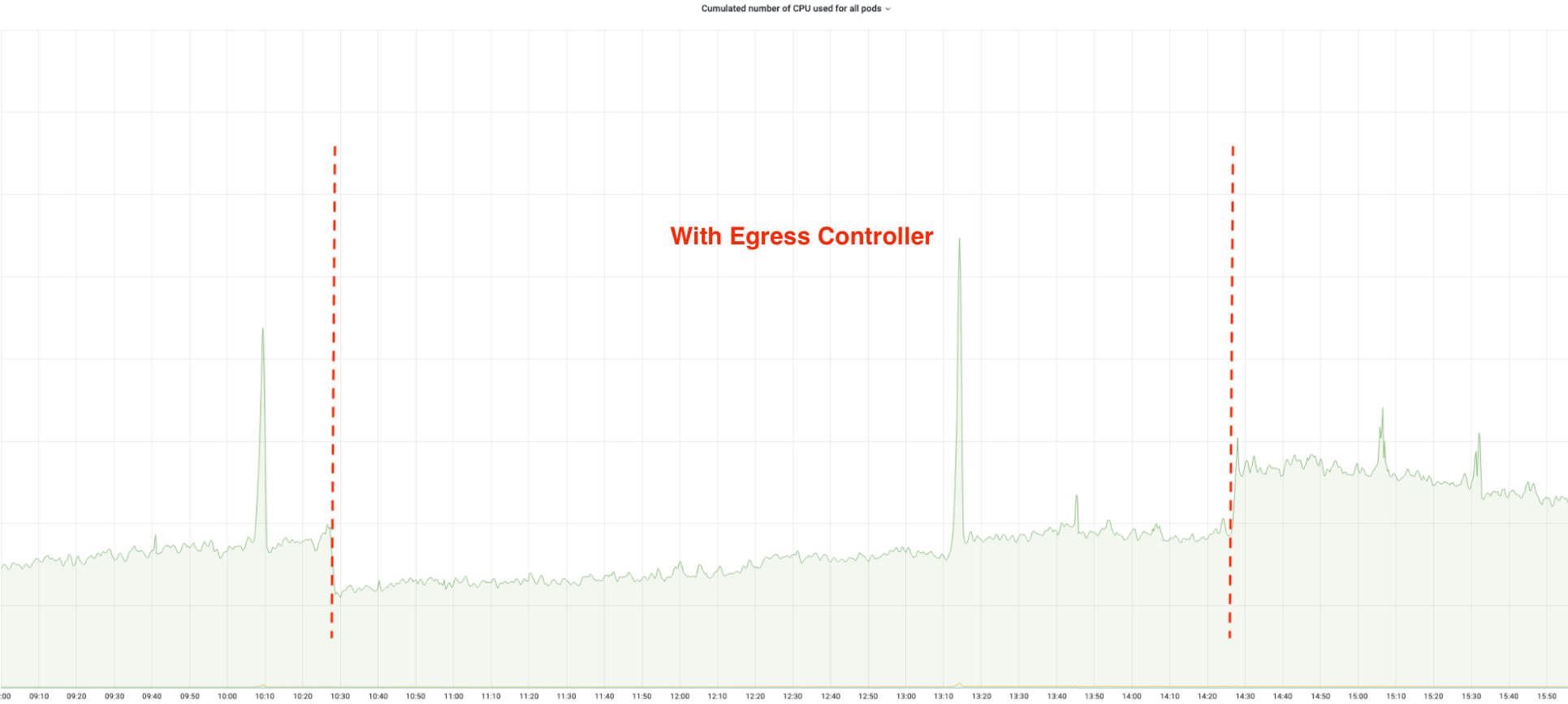

With this optimization, we don’t encounter ErrorPortAllocation anymore. Request durations are reduced by 20 to 30%, and the applications consume less CPU: the resources they used to spend establishing new TLS connections are saved, because TLS is now handled by the Egress Controller.

Detailed configuration

We generally prefer to use what already exists rather than starting from scratch, so we tried to see if HAProxy Kubernetes Ingress Controller could be used as egress.

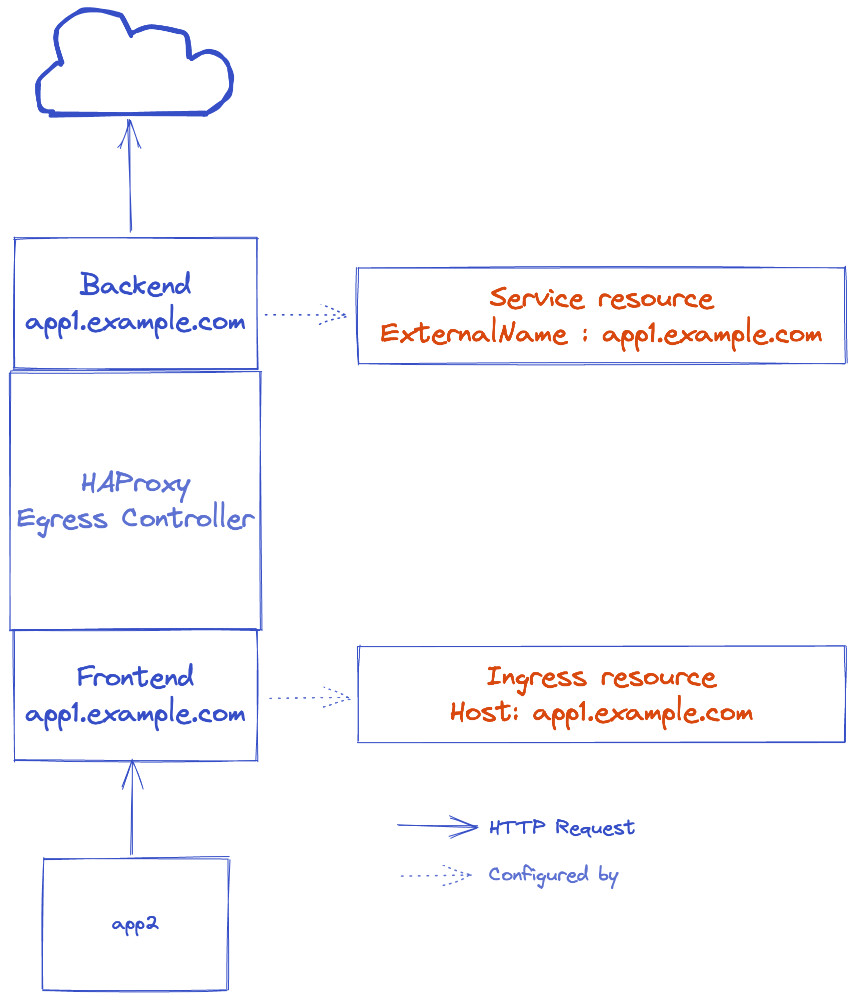

HAProxy Ingress Controller loads its frontend domains from Ingress resources and its backend servers from the associated Service resources. To inject an external domain as a backend server, we have to use a Service of type ExternalName.

To use HAProxy Kubernetes Ingress Controller as an Egress Controller, we use Kubernetes Ingress resources as egress definitions, declaring the domains handled by the Controller:

    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: app1
    spec:
      type: ExternalName
      externalName: app1.example.com
      ports:
        - name: https
          protocol: TCP
          port: 443
          targetPort: 443
    ---
    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      name: app1
      annotations:
        haproxy.org/server-ssl: "true"
        haproxy.org/backend-config-snippet: |
          # See this article for the deep reasons of both parameters: https://www.haproxy.com/fr/blog/http-keep-alive-pipelining-multiplexing-and-connection-pooling/
          # enforce SNI with the Host string instead of the 'Host' header, because HAProxy cannot reuse connections with a non-fixed Host SNI value.
          default-server check-sni app1.example.com sni str(app1.example.com) resolvers mydns resolve-prefer ipv4
          # make HAProxy reuse connections, because the default safe mode reuses connections only for the same source.ip
          http-reuse always
    spec:
      rules:
        - host: app1.example.com
          http:
            paths:
              - backend:
                  serviceName: app1
                  servicePort: 443

When everything is ready, you will be able to send requests:

    curl -H "host: app1.example.com" https://haproxy-egress.default.svc.cluster.local/health
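In application code, the same idea boils down to rewriting the target URL so that the request goes to the Egress Controller, while keeping the original domain in the Host header. Here is a minimal sketch in Python, assuming the Egress Controller is reachable under the Service name used in the curl example; the helper name via_egress is hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit

# Service name taken from the curl example; adjust it to your own cluster.
EGRESS_HOST = "haproxy-egress.default.svc.cluster.local"


def via_egress(url: str, egress_host: str = EGRESS_HOST):
    """Return (rewritten_url, headers) that send `url` through the Egress Controller."""
    parts = urlsplit(url)
    # The original domain travels in the Host header so HAProxy can pick the backend.
    headers = {"Host": parts.netloc}
    rewritten = urlunsplit(
        (parts.scheme, egress_host, parts.path, parts.query, parts.fragment)
    )
    return rewritten, headers


print(via_egress("https://app1.example.com/health"))
# ('https://haproxy-egress.default.svc.cluster.local/health', {'Host': 'app1.example.com'})
```

Keeping the rewrite in a single helper makes it easy to enable the Egress Controller for only some applications or only some destinations.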

By default, HAProxy resolves domain names only at boot time. It can be configured to resolve them at runtime by adding a config snippet to the Egress Controller configuration:

    global-config-snippet: |
      resolvers mydns
        nameserver local <MY_DNS>:53

Conclusions

It may seem surprising to reduce request latency by adding a hop to the network path. But it really does work, even if it has some limits.

The main problem with this approach is that we effectively create a Single Point of Failure in our clusters if we choose to send all our egress traffic through it. Instead, we carefully select the applications that should use an Egress Controller, refining the configuration little by little. Some applications are tightly coupled to external services and would gain massively from this, while others would only become less resilient.